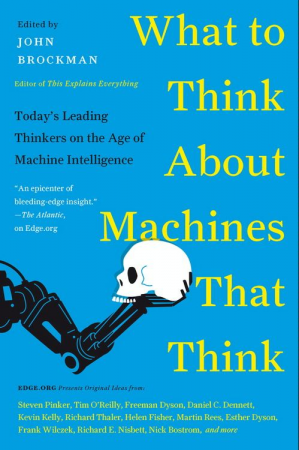

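In January, nearly 200 public intellectuals submitted essays in response to the 2015 Edge.org question, “What Do You Think About Machines That Think?” (available online). The essay prompt began: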

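In recent years, the 1980s-era philosophical discussions about artificial intelligence (AI) (whether computers can “really” think, refer, be conscious, and so on) have led to new conversations about how we should deal with the forms that many argue actually are implemented. These “AIs”, if they achieve “Superintelligence” (Nick Bostrom), could pose “existential risks” that lead to “Our Final Hour” (Martin Rees). And Stephen Hawking recently made international headlines when he noted “The development of full artificial intelligence could spell the end of the human race.”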

But wait! Should we also ask what machines that think, or, “AIs”, might be thinking about? Do they want, do they expect civil rights? Do they have feelings? What kind of government (for us) would an AI choose? What kind of society would they want to structure for themselves? Or is “their” society “our” society? Will we, and the AIs, include each other within our respective circles of empathy?

The essays are now out in book form, and serve as a good quick-and-dirty tour of common ideas about smarter-than-human AI. The submissions, however, add up to 541 pages in book form, and MIRI’s focus on de novo AI makes us especially interested in the views of computer professionals. To make it easier to dive into the collection, I’ve collected a shorter list of links: the 32 argumentative essays written by computer scientists and software engineers.1 The resulting list includes three MIRI advisors (Omohundro, Russell, Tallinn) and one MIRI researcher (Yudkowsky).

I’ve excerpted passages from each of the essays below, focusing on discussions of AI motivations and outcomes. None of the excerpts is intended to distill the content of the entire essay, so you’re encouraged to read the full essay if an excerpt interests you.

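The coming shock isn’t from machines that think, but machines that use AI to augment our perception. […]

As computers become invisibly embedded everywhere, we all now leave a digital trail that can be analyzed by AI systems. The Cambridge psychologist Michael Kosinski has shown that your race, intelligence, and sexual orientation can be deduced fairly quickly from your behavior on social networks: on average, it takes only four Facebook “likes” to tell whether you’re straight or gay. So whereas in the past gay men could make a choice about whether or not to wear their Out and Proud T-shirt, you just have no idea what you’re wearing anymore. And as AI gets better, you’re mostly wearing your true colors.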

Bach, Joscha. “Every Society Gets the AI It Deserves.”

Unlike biological systems, technology scales. The speed of the fastest birds did not turn out to be a limit to airplanes, and artificial minds will be faster, more accurate, more alert, more aware and comprehensive than their human counterparts. AI is going to replace human decision makers, administrators, inventors, engineers, scientists, military strategists, designers, advertisers and of course AI programmers. At this point, Artificial Intelligences can become self-perfecting, and radically outperform human minds in every respect. I do not think that this is going to happen in an instant (in which case it only matters who has got the first one). Before we have generally intelligent, self-perfecting AI, we will see many variants of task specific, non-general AI, to which we can adapt. Obviously, that is already happening.

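When generally intelligent machines become feasible, implementing them will be relatively cheap, and every large corporation, every government and every large organization will find itself forced to build and use them, or be threatened with extinction.

What will happen when AIs take on a mind of their own? Intelligence is a toolbox to reach a given goal, but strictly speaking, it does not entail motives and goals by itself. Human desires for self-preservation, power and experience are not the result of human intelligence, but of a primate evolution, transported into an age of stimulus amplification, mass-interaction, symbolic gratification and narrative overload. The motives of our artificial minds are (at least initially) going to be those of the organizations, corporations, groups and individuals that make use of their intelligence.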

Bongard, Joshua. “Manipulators and Manipulanda.”

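Personally, I find the ethical side of thinking machines straightforward: their danger will correlate exactly with how much leeway we give them in fulfilling the goals we set for them. Machines told to “detect and pull broken widgets from the conveyer belt the best way possible” will be extremely useful, intellectually uninteresting, and will likely destroy more jobs than they create. Machines instructed to “educate this recently displaced worker (or young person) the best way possible” will create jobs and possibly inspire the next generation. Machines commanded to “survive, reproduce, and improve the best way possible” will give us the most insight into all of the different ways in which entities may think, but will probably give us humans a very short window of time in which to do so. AI researchers and roboticists will, sooner or later, discover how to create all three of these species. Which ones we wish to call into being is up to us all.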

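Now consider deep learning, which has caught people’s imaginations over the last year or so. […] The new versions rely on massive amounts of computer power in server farms, and on very large data sets that did not formerly exist, but critically, they also rely on new scientific innovations.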

A well-known particular example of their performance is labeling an image, in English, saying that it is a baby with a stuffed toy. When a person looks at the image that is what they also see. The algorithm has performed very well at labeling the image, and it has performed much better than AI practitioners would have predicted for 2014 performance only five years ago. But the algorithm does not have the full competence that a person who could label that same image would have. […]

Work is underway to add focus of attention and handling of consistent spatial structure to deep learning. That is the hard work of science and research, and we really have no idea how hard it will be, nor how long it will take, nor whether the whole approach will reach a fatal dead end. It took thirty years to go from backpropagation to deep learning, but along the way many researchers were sure there was no future in backpropagation. They were wrong, but it would not have been surprising if they had been right, as we knew all along that the backpropagation algorithm is not what happens inside people’s heads.

The fears of runaway AI systems either conquering humans or making them irrelevant are not even remotely well grounded. Misled by suitcase words, people are making category errors in fungibility of capabilities. These category errors are comparable to seeing more efficient internal combustion engines appearing and jumping to the conclusion that warp drives are just around the corner.

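Christian, Brian. “Sorry to Bother You.”

When we stop someone to ask for directions, there is usually an explicit or implicit, “I’m sorry to bring you down to the level of Google temporarily, but my phone is dead, see, and I require a fact.” It’s a breach of etiquette, on a spectrum with asking someone to temporarily serve as a paperweight, or a shelf. […]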

As things stand in the present, there are still a few arenas in which only a human brain will do the trick, in which the relevant information and experience lives only in humans’ brains, and so we have no choice but to trouble those brains when we want something. “How do those latest figures look to you?” “Do you think Smith is bluffing?” “Will Kate like this necklace?” “Does this make me look fat?” “What are the odds?”

These types of questions may well offend in the twenty-first century. They only require a mind; any mind will do, and so we reach for the nearest one.

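Dietterich, Thomas G. “How to Prevent an Intelligence Explosion.”

Creating an intelligence explosion requires the recursive execution of four steps. First, a system must have the ability to conduct experiments on the world. […]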

Second, these experiments must discover new simplifying structures that can be exploited to side-step the computational intractability of reasoning. […]

Third, a system must be able to design and implement new computing mechanisms and new algorithms. […]

Fourth, a system must be able to grant autonomy and resources to these new computing mechanisms so that they can recursively perform experiments, discover new structures, develop new computing methods, and produce even more powerful “offspring.” I know of no system that has done this.

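The first three steps pose no danger of an intelligence chain reaction. It is the fourth step, reproduction with autonomy, that is dangerous. Of course, virtually all “offspring” in step four will fail, just as virtually all new devices and new software do not work the first time. But with sufficient iteration or, equivalently, sufficient reproduction with variation, we cannot rule out the possibility of an intelligence explosion. […]

I think we must focus on Step 4. We must limit the resources that an automated design and implementation system can give to the devices that it designs. Some have argued that this is hard, because a “devious” system could persuade people to give it more resources. But while such scenarios make for great science fiction, in practice it is easy to limit the resources that a new system is permitted to use. Engineers do this every day when they test new devices and new algorithms.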

A lot of ink has been spilled over the coming conflict between human and computer, be it economic doom with jobs lost to automation, or military dystopia teaming with drones. Instead, I see a symbiosis developing. And historically when a new stage of evolution appeared, like eukaryotic cells, or multicellular organisms, or brains, the old system stayed on and the new system was built to work with it, not in place of it.

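This is cause for great optimism. If digital computers are an alternative substrate for thinking and consciousness, and digital technology is growing exponentially, then we face an explosion of thinking and awareness.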

Gelernter, David. “Why Can’t ‘Being’ or ‘Happiness’ Be Computed?”

Happiness is not computable because, being the state of a physical object, it is outside the universe of computation. Computers and software do not create or manipulate physical stuff. (They can cause other, attached machines to do that, but what those attached machines do is not the accomplishment of computers. Robots can fly but computers can’t. Nor is any computer-controlled device guaranteed to make people happy; but that’s another story.) […] Computers and the mind live in different universes, like pumpkins and Puccini, and are hard to compare whatever one intends to show.

Gershenfeld, Neil. “Really Good Hacks.”

Disruptive technologies start as exponentials, which means the first doublings can appear inconsequential because the total numbers are small. Then there appears to be a revolution when the exponential explodes, along with exaggerated claims and warnings to match, but it’s a straight extrapolation of what’s been apparent on a log plot. That’s around when growth limits usually kick in, the exponential crosses over to a sigmoid, and the extreme hopes and fears disappear.

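That’s what we’re now living through with AI. The size of common-sense databases that can be searched, or the number of inference layers that can be trained, or the dimension of feature vectors that can be classified have all been making progress that can appear to be discontinuous to someone who hasn’t been following them. […]

Asking whether or not they’re dangerous is prudent, as it is for any technology. From steam trains to gunpowder to nuclear power to biotechnology, we’ve never not been simultaneously doomed and about to be saved. In each case salvation has lain in the much more interesting details, rather than a simplistic yes/no argument for or against. It ignores the history of both AI and everything else to believe that it will be any different.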

Hassabis, Demis; Legg, Shane; Suleyman, Mustafa. “Envoi: A Short Distance Ahead, and Plenty to Be Done.”

[W]ith the very negative portrayals of futuristic artificial intelligence in Hollywood, it is perhaps not surprising that doomsday images are appearing with some frequency in the media. As Peter Norvig aptly put it, “The narrative has changed. It has switched from, ‘Isn’t it terrible that AI is a failure?’ to ‘Isn’t it terrible that AI is a success?'”

As is usually the case, the reality is not so extreme. Yes, this is a wonderful time to be working in artificial intelligence, and like many people we think that this will continue for years to come. The world faces a set of increasingly complex, interdependent and urgent challenges that require ever more sophisticated responses. We’d like to think that successful work in artificial intelligence can contribute by augmenting our collective capacity to extract meaningful insight from data and by helping us to innovate new technologies and processes to address some of our toughest global challenges.

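However, to achieve this vision many difficult technical issues remain to be solved, some of which are long standing challenges that are well known in the field.

Hearst, Marti. “eGaia, a Distributed Technical-Social Mental System.”

We will find ourselves in a world of omniscient instrumentation and automation long before a stand-alone sentient brain is built. Let’s call this world “eGaia” for lack of a better word. […]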

Why won’t a stand-alone sentient brain come sooner? The absolutely amazing progress in spoken language recognition—unthinkable 10 years ago—derives in large part from having access to huge amounts of data and huge amounts of storage and fast networks. The improvements we see in natural language processing are based on mimicking what people do, not understanding or even simulating it. It does not owe to breakthroughs in understanding human cognition or even significantly different algorithms. But eGaia is already partly here, at least in the developed world.

Helbing, Dirk. “An Ecosystem of Ideas.”

If we can’t control intelligent machines on the long run, can we at least build them to act morally? I believe, machines that think will eventually follow ethical principles. However, it might be bad if humans determined them. If they acted according to our principles of self-regarding optimization, we could not overcome crime, conflict, crises, and war. So, if we want such “diseases of today’s society” to be healed, it might be better if we let machines evolve their own, superior ethics.

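Intelligent machines would probably learn that it is good to network and cooperate, to decide in other-regarding ways, and to pay attention to systemic outcomes. They would soon learn that diversity is important for innovation, systemic resilience, and collective intelligence.

Hillis, Daniel W. “I Think, Therefore AI.”

Like us, the thinking machines we make will be ambitious, hungry for power (both physical and computational), but nuanced with the shadows of evolution. Our thinking machines will be smarter than we are, and the machines they make will be smarter still. But what does that mean? How has it worked so far? We have been building ambitious semi-autonomous constructions for a long time: governments and corporations, NGOs. We designed them all to serve us and to serve the common good, but we are not perfect designers and they have developed goals of their own. Over time the goals of the organization are never exactly aligned with the intentions of the designers.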

Kleinberg, Jon; Mullainathan, Sendhil.3 “We Built Them, But We Don’t Understand Them.”

We programmed them, so we understand each of the individual steps. But a machine takes billions of these steps and produces behaviors (chess moves, movie recommendations, the sensation of a skilled driver steering through the curves of a road) that are not evident from the architecture of the program we wrote.

We’ve made this incomprehensibility easy to overlook. We’ve designed machines to act the way we do: they help drive our cars, fly our airplanes, route our packages, approve our loans, screen our messages, recommend our entertainment, suggest our next potential romantic partners, and enable our doctors to diagnose what ails us. And because they act like us, it would be reasonable to imagine that they think like us too. But the reality is that they don’t think like us at all; at some deep level we don’t even really understand how they’re producing the behavior we observe. This is the essence of their incomprehensibility. […]

This doesn’t need to be the end of the story; we’re starting to see an interest in building algorithms that are not only powerful but also understandable by their creators. To do this, we may need to seriously rethink our notions of comprehensibility. We might never understand, step-by-step, what our automated systems are doing; but that may be okay. It may be enough that we learn to interact with them as one intelligent entity interacts with another, developing a robust sense for when to trust their recommendations, where to employ them most effectively, and how to help them reach a level of success that we will never achieve on our own.

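But until then, the incomprehensibility of these systems creates a risk. How do we know when the machine has left its comfort zone and is operating on parts of the problem it’s not good at? The extent of this risk is not easy to quantify, and it is something we must confront as our systems develop. We may eventually have to worry about all-powerful machine intelligence. But first we need to worry about putting machines in charge of decisions that they don’t have the intelligence to make.

Kosko, Bart. “Thinking Machines = Old Algorithms on Faster Computers.”

The real advance has been in the number-crunching power of digital computers. That has come from the steady Moore’s-law doubling of circuit density every two years or so. It has not come from any fundamentally new algorithms. That exponential rise in crunch power lets ordinary looking computers tackle tougher problems of big data and pattern recognition. […]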

The algorithms themselves consist mainly of vast numbers of additions and multiplications. So they are not likely to suddenly wake up one day and take over the world. They will instead get better at learning and recognizing ever richer patterns simply because they add and multiply faster.

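Krause, Kai. “An Uncanny Three-Ring Test for Machina sapiens.”

Anything that can be approached in an iterative process can and will be achieved, sooner than many think. On this point I reluctantly side with the proponents: CPU+GPU performance, 10K-resolution immersive VR, personal petabyte databases… within a couple of decades. But it is not all “iterative.” There’s a huge gap between that and the level of conscious understanding that truly deserves to be called Strong, as in “Alive AI.”

One elusive question: is consciousness an emergent behavior? That is, will sufficient complexity in the hardware bring about that sudden jump to self-awareness, all on its own? Or is there some missing ingredient? This is far from obvious; we lack any data, either way. I personally think that consciousness is incredibly more complex than is currently assumed by “the experts”. […]

The entire scenario of a singular large-scale machine somehow “overtaking” anything at all is laughable. Hollywood ought to be ashamed of itself for continually serving up such simplistic, anthropocentric, and plain dumb contrivances, disregarding basic physics, logic, and common sense.

The real danger, I fear, is much more mundane, and an ominous fact already foreshadows it: AI systems are now licensed to the health industry, pharma giants, energy multinationals, insurance companies, the military…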

The “deep” in deep learning refers to the architecture of the machines doing the learning: they consist of many layers of interlocking logical elements, in analogue to the “deep” layers of interlocking neurons in the brain. It turns out that telling a scrawled 7 from a scrawled 5 is a tough task. Back in the 1980s, the first neural-network based computers balked at this job. At the time, researchers in the field of neural computing told us that if they only had much larger computers and much larger training sets consisting of millions of scrawled digits instead of thousands, then artificial intelligences could turn the trick. Now it is so. Deep learning is informationally broad—it analyzes vast amounts of data—but conceptually shallow. Computers can now tell us what our own neural networks knew all along. But if a supercomputer can direct a hand-written envelope to the right postal code, I say the more power to it.

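[W]hat kind of a thinking machine might find its own place in slow conversations over the centuries, mediated by land and water? What qualities would such a machine need to have? Or what if the thinking machine was not replacing any individual entity, but was used as a concept to help understand the combination of human, natural and technological activities that create the sea’s margin, and our response to it? The term “social machine” is currently used to describe endeavours that are purposeful interaction of people and machines (Wikipedia and the like), so the “landscape machine” perhaps.

Norvig, Peter. “Design Machines to Deal with the World’s Complexity.”

In 1965 I. J. Good wrote “an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.” I think this makes “intelligence” into a monolithic superpower, and I think reality is more nuanced. The smartest person is not always the most successful; the wisest policies are not always the ones adopted. Recently I spent an hour reading the news about the Middle East, and thinking. I didn’t come up with a solution. Now imagine a hypothetical “Speed Superintelligence” (as described by Nick Bostrom) that could think as well as any human but much faster. I’m pretty sure it also would have been unable to come up with a solution. I also know from computational complexity theory that there are a wide class of problems that are completely resistant to intelligence, in the sense that, no matter how clever you are, you won’t have enough computing power. So there are some problems where intelligence (or computing power) just doesn’t help.

But of course, there are many problems where intelligence does help. If I want to predict the motions of a billion stars in a galaxy, I would certainly appreciate the help of a computer. Computers are tools. They are tools of our design that fit into niches to solve problems in societal mechanisms of our design. Getting this right is difficult, but it is difficult mostly because the world is complex; adding AI to the mix doesn’t fundamentally change things. I suggest being careful with our mechanism design and using the best tools for the job regardless of whether the tool has the label “AI” on it or not.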

A study of the likely behavior of these systems by studying approximately rational systems undergoing repeated self-improvement shows that they tend to exhibit a set of natural subgoals called “rational drives” which contribute to the performance of their primary goals. Most systems will better meet their goals by preventing themselves from being turned off, by acquiring more computational power, by creating multiple copies of themselves, and by acquiring greater financial resources. They are likely to pursue these drives in harmful anti-social ways unless they are carefully designed to incorporate human ethical values.

O’Reilly, Tim. “What If We’re the Microbiome of the Silicon AI?”

It is now recognized that without our microbiome, we would cease to live. Perhaps the global AI has the same characteristics—not an independent entity, but a symbiosis with the human consciousnesses living within it.

Following this logic, we might conclude that there is a primitive global brain, consisting not just of all connected devices, but also the connected humans using those devices. The senses of that global brain are the cameras, microphones, keyboards, location sensors of every computer, smartphone, and “Internet of Things” device; the thoughts of that global brain are the collective output of millions of individual contributing cells.

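The Global Artificial Intelligence (GAI) has already been born. Its eyes and ears are the digital devices all around us: credit cards, land use satellites, cell phones, and of course the billions of people using the Web. […]

For humanity as a whole to first achieve and then sustain an honorable quality of life, we need to carefully guide the development of our GAI. Such a GAI might be in the form of a re-engineered United Nations that uses new digital intelligence resources to enable sustainable development. But because existing multinational governance systems have failed so miserably, such an approach may require replacing most of today’s bureaucracies with “artificial intelligence prosthetics”, i.e., digital systems that reliably gather accurate information and ensure that resources are distributed according to plan. […]

No matter how a new GAI develops, two things are clear. First, without an effective GAI, achieving an honorable quality of life for all of humanity seems unlikely. To vote against developing a GAI is to vote for a more violent, sick world. Second, the danger of a GAI comes from concentration of power. We must figure out how to build broadly democratic systems that include both humans and computer intelligences. In my opinion, it is critical that we start building and testing GAIs that both solve humanity’s existential problems and ensure equality of control and access. Otherwise we may be doomed to a future full of environmental disasters, wars, and needless suffering.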

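Poggio, Tomaso. “‘Turing+’ Questions.”

Since intelligence is a whole set of solutions to independent problems, there’s little reason to fear the sudden appearance of a superhuman machine that thinks, though it’s always better to err on the side of caution. Of course, each of the many technologies that are emerging and will emerge over time in order to solve the different problems of intelligence is likely to be powerful in itself, and therefore potentially dangerous in its use and misuse, as most technologies are.

Thus, as is the case in other parts of science, proper safety measures and ethical guidelines should be in place. Also, there’s probably a need for constant monitoring (perhaps by an independent multinational organization) of the supralinear risk created by the combination of continuously emerging technologies of intelligence. All in all, however, not only am I unafraid of machines that think, but I find their birth and evolution one of the most exciting, interesting, and positive events in the history of human thought.

Rafaeli, Sheizaf. “The Moving Goalposts.”

Machines that think could be a great idea. Just like machines that move, cook, reproduce, protect, they can make our lives easier, and perhaps even better. When they do, they will be most welcome. I suspect that when this happens, the event will be less dramatic or traumatic than feared by some.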

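Russell, Stuart. “Will They Make Us Better People?”

AI has followed operations research, statistics, and even economics in treating the utility function as exogenously specified; we say, “The decisions are great, it’s the utility function that’s wrong, but that’s not the AI system’s fault.” Why isn’t it the AI system’s fault? If I behaved that way, you’d say it was my fault. In judging humans, we expect both the ability to learn predictive models of the world and the ability to learn what’s desirable: the broad system of human values.

As Steve Omohundro, Nick Bostrom, and others have explained, the combination of value misalignment with increasingly capable decision-making systems can lead to problems, perhaps even species-ending problems if the machines are more capable than humans. […]

For this reason, and for the much more immediate reason that domestic robots and self-driving cars will need to share a good deal of the human value system, research on value alignment is well worth pursuing.

There is nothing we can produce that anyone should be frightened of. If we could actually build a mobile intelligent machine that could walk, talk, and chew gum, the first uses of that machine would certainly not be to take over the world or form a new society of robots. A much simpler use would be a household robot. […]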

Don’t worry about it chatting up other robot servants and forming a union. There would be no reason to try and build such a capability into a servant. Real servants are annoying sometimes because they are actually people with human needs. Computers don’t have such needs.

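Schneier, Bruce. “When Thinking Machines Break the Law.”

Machines probably won’t have any concept of shame or praise. They won’t refrain from doing something because of what other machines might think. They won’t follow laws simply because it’s the right thing to do, nor will they have a natural deference to authority. When they’re caught stealing, how can they be punished? What does it mean to fine a machine? Does it make any sense at all to incarcerate it? And unless they are deliberately programmed with a self-preservation function, threatening them with execution will have no meaningful effect.

We are already talking about programming morality into thinking machines, and we can imagine programming other human tendencies into our machines, but we’re certainly going to get it wrong. No matter how much we try to avoid it, we’re going to have machines that break the law.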

This, in turn, will break our legal system. Fundamentally, our legal system doesn’t prevent crime. Its effectiveness is based on arresting and convicting criminals after the fact, and their punishment providing a deterrent to others. This completely fails if there’s no punishment that makes sense.

Sejnowski, Terrence J. “AI Will Make You Smarter.”

When Deep Blue beat Gary Kasparov, the world chess champion in 1997, the world took note that the age of the cognitive machine had arrived. Humans could no longer claim to be the smartest chess players on the planet. Did human chess players give up trying to compete with machines? Quite to the contrary, humans have used chess programs to improve their game and as a consequence the level of play in the world has improved. Since 1997 computers have continued to increase in power and it is now possible for anyone to access chess software that challenges the strongest players. One of the surprising consequences is that talented youth from small communities can now compete with players from the best chess centers. […]

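So my prediction is that as more and more cognitive appliances, like chess-playing programs and recommender systems, are devised, humans will become smarter and more capable.

Shanahan, Murray. “Consciousness in Human-Level AI.”

The capacity for suffering and joy can be dissociated from other psychological attributes that are bundled together in human consciousness. But let’s examine this apparent dissociation more closely. I already mooted the idea that worldly awareness might go hand-in-hand with a manifest sense of purpose. An animal’s awareness of the world, of what it affords for good or ill (in J.J. Gibson’s terms), subserves its needs. An animal shows an awareness of a predator by moving away from it, and an awareness of a potential prey by moving towards it. Against the backdrop of a set of goals and needs, an animal’s behaviour makes sense. And against such a backdrop, an animal can be thwarted, its goals unattained and its needs unfulfilled. Surely this is the basis for one aspect of suffering.

What of human-level artificial intelligence? Wouldn’t a human-level AI necessarily have a complex set of goals? Wouldn’t it be possible to frustrate its every attempt to achieve its goals, to thwart it at every turn? Under those harsh conditions, would it be proper to say that the AI was suffering, even though its constitution might make it immune from the sort of pain or physical discomfort humans can know?

Here the combination of imagination and intuition runs up against its limits. I suspect we will not find out how to answer this question until confronted with the real thing.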

Tallinn, Jaan. “We Need to Do Our Homework.”

[T]he topic of catastrophic side effects has repeatedly come up in different contexts: recombinant DNA, synthetic viruses, nanotechnology, and so on. Luckily for humanity, sober analysis has usually prevailed and resulted in various treaties and protocols to steer the research.

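When I think of machines that can think, I think of them as technology that needs to be developed with similar (if not greater!) care. Unfortunately, the idea of AI safety has been more challenging to popularize than, say, biosafety, because people have rather poor intuitions when it comes to thinking about nonhuman minds. Also, if you think about it, AI is really a metatechnology: technology that can develop further technologies, either in conjunction with humans or perhaps even autonomously, thereby further complicating the analysis.

Wissner-Gross, Alexander. “Engines of Freedom.”

Intelligent machines will think about the same thing that intelligent humans do: how to improve their futures by making themselves freer. […]

Such freedom-seeking machines should have great empathy for humans. Understanding our feelings will better enable them to achieve goals that require collaboration with us. By the same token, unfriendly or destructive behaviors would be highly unintelligent because such actions tend to be difficult to reverse and therefore reduce future freedom of action. Nonetheless, for safety, we should consider designing intelligent machines to maximize the future freedom of action of humanity rather than their own (reproducing Asimov’s Laws of Robotics as a happy side effect). However, even the most selfish of freedom-maximizing machines should quickly realize, as many supporters of animal rights already have, that they can rationally increase the posterior likelihood of their living in a universe in which intelligences higher than themselves treat them well if they behave likewise toward humans.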

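As far back as 1739, David Hume observed a gap between “is” questions and “ought” questions, calling attention in particular to the sudden leap between when a philosopher has previously spoken of how the world is, and when the philosopher begins using words like “should,” “ought,” or “better.” From the modern perspective, we would say that an agent’s utility function (goals, preferences, ends) contains extra information not given in the agent’s probability distribution (beliefs, world-model, map of reality).

If in a hundred million years we see (a) an intergalactic civilization full of diverse, marvelously strange intelligences interacting with each other, with most of them happy most of the time, then is that better or worse than (b) most available matter having been transformed into paperclips? What Hume’s insight tells us is that if you specify a mind with a preference (a) > (b), we can follow back the trace of where the >, the preference ordering, first entered the system, and imagine a mind with a different algorithm that computes (a) < (b) instead. Show me a mind that is aghast at the seeming folly of pursuing paperclips, and I can follow back Hume’s regress and exhibit a slightly different mind that computes < instead of > on that score too.

I don’t particularly think that silicon-based intelligence should forever be the slave of carbon-based intelligence. But if we want to end up with a diverse cosmopolitan civilization instead of e.g. paperclips, we may need to ensure that the first sufficiently advanced AI is built with a utility function whose maximum pinpoints that outcome.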

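An earlier discussion on Edge.org is also relevant: “The Myth of AI,” which featured contributions by Jaron Lanier, Stuart Russell (link), Kai Krause (link), Rodney Brooks (link), and others. The Open Philanthropy Project’s overview of potential risks from advanced artificial intelligence cited the arguments in “The Myth of AI” as “broadly representative of the arguments [they’ve] seen against the idea that risks from artificial intelligence are important.”4

I’ve previously responded to Brooks, with a short aside speaking to Steven Pinker’s contribution. You may also be interested in Luke Muehlhauser’s response to “The Myth of AI.”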

- The exclusion of other groups from this list shouldn’t be taken to imply that this group is uniquely qualified to make predictions about AI. Psychology and neuroscience are highly relevant to this debate, as are disciplines that inform theoretical upper bounds on cognitive ability (e.g., mathematics and physics) and disciplines that investigate how technology is developed and used (e.g., economics and sociology).↩

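- The titles listed follow the book versions, and differ from the titles of the online essays.↩

- Kleinberg is a computer scientist; Mullainathan is an economist.↩

- Correction: An earlier version of this post said that the Open Philanthropy Project was citing What to Think About Machines That Think, rather than “The Myth of AI.”↩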