Markus Schmidt on Risks from Novel Biotechnologies

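Dr. Markus Schmidt is founder and team leader of Biofaction, a research and science communication company in Vienna, Austria. With an educational background in electronic engineering, biology, and environmental risk assessment, he has carried out environmental risk assessment and safety and public perception studies in a number of science and technology fields (GM crops, gene therapy, nanotechnology, converging technologies, and synthetic biology) for more than 10 years.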

He has been coordinator or partner in several national and European research projects, for example SYNBIOSAFE, the first European project on safety and ethics of synthetic biology (2007-2008); COSY, on communicating synthetic biology (2008-2009); TARPOL, on industrial and environmental applications of synthetic biology (2008-2010); CISYNBIO, on the depiction of synthetic biology in movies (2009-2012); a joint Sino-Austrian project on synthetic biology and risk assessment (2009-2012); and ST-FLOW, on standardization for robust bioengineering of new-to-nature biological properties (2011-2015).

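He produced science policy reports for the Office of Technology Assessment at the German Bundestag (on GM crops in China) and for the Austrian Ministry of Transport, Innovation and Technology (on nanotechnology and converging technologies). He has served as an advisor to the European Group on Ethics (EGE) of the European Commission, the US Presidential Commission for the Study of Bioethical Issues, the J Craig Venter Institute, the Alfred P. Sloan Foundation, and the Bioethics Council of the German Parliament, as well as to several thematically related international projects. Markus Schmidt is the author of several peer-reviewed articles, has edited a special issue and two books on synthetic biology and its societal ramifications, and produced the first documentary film on synthetic biology.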

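In addition to his scientific work, he organized a science film festival and produced an art exhibition (both in 2011) to explore novel and creative ideas and interpretations about the future of biotechnology.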

Luke Muehlhauser: I’ll start by giving our readers a quick overview of synthetic biology, the “design and construction of biological devices and systems for useful purposes.” As explained in a 2012 book you edited, major applications of synthetic biology include:

- Biofuels: ethanol, algae-based fuels, bio-hydrogen, microbial fuel cells, etc.

- Bioremediation: wastewater treatment, water desalination, solid waste decomposition, CO2 recovery, etc.

- Biomaterials: bioplastics, bulk chemicals, cellulosomes, etc.

- Novel developments: protocells and xenobiology for the production of novel cells and organisms.

But in addition to promoting the useful applications of synthetic biology, you also speak and write extensively about the potential risks of synthetic biology. Which risks from novel biotechnologies are you most concerned about?

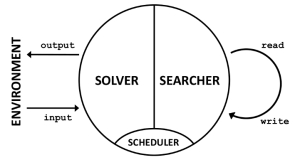
